Bunny-VisionPro

Real-Time Bimanual Dexterous Teleoperation for Imitation Learning

Bunny-VisionPro is a real-time bimanual teleoperation system that prioritizes safety and minimal delay. It also provides haptic feedback to the human operator to enhance immersion. The high-quality demonstrations it collects improve imitation learning.

Abstract

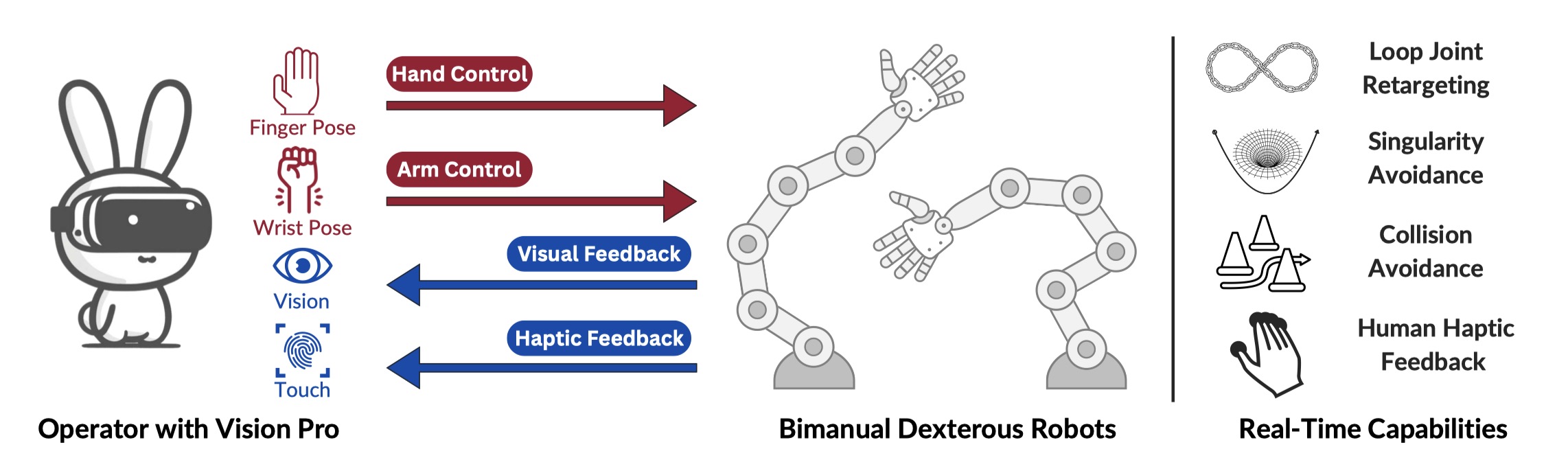

Teleoperation is a crucial tool for collecting human demonstrations, but controlling robots with bimanual dexterous hands remains a challenge. We introduce Bunny-VisionPro, a real-time bimanual dexterous teleoperation system that leverages a VR headset. Unlike previous vision-based teleoperation systems, we design novel low-cost devices to provide haptic feedback to the operator, enhancing immersion. Our system prioritizes safety by incorporating collision and singularity avoidance while maintaining real-time performance through innovative designs. Bunny-VisionPro outperforms prior systems on a standard task suite, achieving higher success rates and reduced task completion times. Moreover, the high-quality teleoperation demonstrations improve downstream imitation learning performance, leading to better generalizability. Notably, Bunny-VisionPro enables imitation learning with challenging multi-stage, long-horizon dexterous manipulation tasks, which have rarely been addressed in previous work. Our system's ability to handle bimanual manipulations while prioritizing safety and real-time performance makes it a powerful tool for advancing dexterous manipulation and imitation learning.

Skincare Teleoperation

Hand poses captured by Apple Vision Pro are converted into robot motion control commands for real-time teleoperation. We prioritize safety through singularity checks and collision avoidance, enabling the robot to perform human skincare tasks safely in teleoperation mode.
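The page does not describe how the singularity check is implemented. As a rough illustration only, one common approach is to monitor the smallest singular value of the arm Jacobian and switch to a damped least-squares inverse when it gets small. The sketch below uses a hypothetical planar two-link arm; the link lengths, threshold, and damping value are assumptions chosen for illustration, not values from Bunny-VisionPro.

```python
import numpy as np

def planar_2link_jacobian(q, l1=0.3, l2=0.25):
    """Position Jacobian of a hypothetical planar 2-link arm (illustration only)."""
    q1, q2 = q
    s1, c1 = np.sin(q1), np.cos(q1)
    s12, c12 = np.sin(q1 + q2), np.cos(q1 + q2)
    return np.array([
        [-l1 * s1 - l2 * s12, -l2 * s12],
        [l1 * c1 + l2 * c12, l2 * c12],
    ])

def near_singularity(J, sigma_min_threshold=0.02):
    """Flag configurations whose smallest singular value is below a threshold."""
    sigma = np.linalg.svd(J, compute_uv=False)  # sorted in descending order
    return bool(sigma[-1] < sigma_min_threshold)

def safe_joint_velocity(J, ee_velocity, sigma_min_threshold=0.02, damping=0.05):
    """Map an end-effector velocity to joint velocities.

    Away from singularities the pseudoinverse is used. Near a singularity,
    damped least squares keeps the joint velocity bounded (assumed strategy).
    """
    if near_singularity(J, sigma_min_threshold):
        JJt = J @ J.T
        regularized = JJt + (damping ** 2) * np.eye(J.shape[0])
        return J.T @ np.linalg.solve(regularized, ee_velocity)
    return np.linalg.pinv(J) @ ee_velocity

# Fully stretched arm (q2 = 0) is singular; a bent elbow is not.
stretched = planar_2link_jacobian((0.3, 0.0))
bent = planar_2link_jacobian((0.3, 1.2))
target_velocity = np.array([0.1, 0.0])
qdot_stretched = safe_joint_velocity(stretched, target_velocity)
qdot_bent = safe_joint_velocity(bent, target_velocity)
```

With damping, the commanded joint speed near the singular pose stays finite at the cost of some tracking error, which is the usual trade-off of this technique.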

Teleoperation System

Human Haptic Feedback

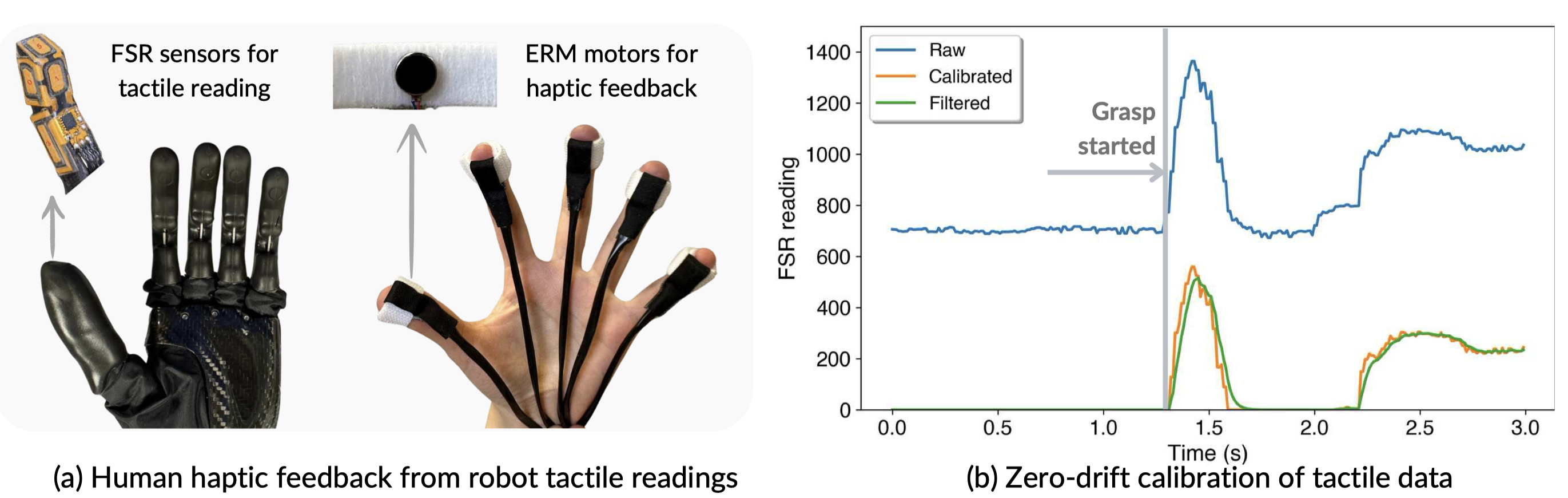

Effective human manipulation integrates visual and tactile feedback, but many vision-based teleoperation systems overlook haptic feedback. To address this gap, we developed a cost-effective haptic feedback system using eccentric rotating mass (ERM) actuators. It processes tactile signals from the robot hands and drives vibration motors to simulate touch sensations, allowing operators to perceive and respond to the environment more intuitively and improving manipulation performance.
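The mapping from tactile signal to vibration can be sketched as follows. This is a minimal illustration, assuming the tactile sensor yields a scalar contact force and the ERM motor is driven by a PWM duty cycle. The function name, deadband, saturation force, and duty-cycle range are all hypothetical and are not taken from the system.

```python
def tactile_to_duty(force_n, deadband_n=0.2, saturation_n=5.0,
                    min_duty=0.25, max_duty=1.0):
    """Map a contact force (newtons) to a PWM duty cycle in [0, 1].

    Assumed behavior, for illustration only:
    - forces at or below the deadband produce no vibration (filters noise),
    - forces above it map linearly onto [min_duty, max_duty],
    - forces beyond the saturation level are clamped to max_duty.
    min_duty is nonzero because an ERM motor needs some minimum drive to spin.
    """
    if force_n <= deadband_n:
        return 0.0
    scale = (force_n - deadband_n) / (saturation_n - deadband_n)
    scale = min(scale, 1.0)
    return min_duty + scale * (max_duty - min_duty)

# One duty cycle per fingertip sensor reading (hypothetical values).
fingertip_forces = [0.05, 1.0, 2.6, 8.0]
duty_cycles = [tactile_to_duty(f) for f in fingertip_forces]
```

A deadband plus saturation keeps the motors quiet during free motion while still giving the operator a graded cue as contact force increases.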

Haptic Feedback Demo

Haptics User Study

Imitation Learning

We evaluate the quality of demonstrations collected by our system by training popular imitation learning algorithms (ACT, Diffusion Policy, and DP3) and testing their generalization in terms of spatial generalization and unseen scenarios. Additionally, we train on challenging long-horizon tasks to showcase our system's effectiveness in collecting high-quality demonstrations.

Long-horizon Tasks

Generalization Results

Cleaning Pan

Spatial Generalization

Unseen Object

Uncovering & Pouring

Spatial Generalization

Unseen Object

Grasping Toy

Spatial Generalization

Unseen Object

Safety and Real-time Performance

Arm Motion Control: Bunny-VisionPro vs AnyTeleop+

Collision Avoidance

Comparing Wearable Devices for Hand Pose Tracking and Robot Retargeting

More Teleoperation Tasks

Haptic Feedback Tasks

Opening Cabinet

Cleaning Whiteboard

Kitchen Tasks

Motion-constrained Tasks

Dynamic Tasks

BibTeX

@article{bunny-visionpro,
  title = {Bunny-VisionPro: Real-Time Bimanual Dexterous Teleoperation for Imitation Learning},
  author = {Runyu Ding and Yuzhe Qin and Jiyue Zhu and Chengzhe Jia and Shiqi Yang and Ruihan Yang and Xiaojuan Qi and Xiaolong Wang},
  year = {2024}
}

If you have any questions, please feel free to contact Runyu Ding.